Tips

Go

Go Self-Study Series | golang packages - COCOgsta's Blog - CSDN

Go Self-Study Series | golang concurrency: iterating over a channel - COCOgsta's Blog - CSDN

Go Self-Study Series | golang concurrency: select switch - COCOgsta's Blog - CSDN

Go Self-Study Series | golang concurrency: the runtime package - COCOgsta's Blog - CSDN

Go Self-Study Series | golang interfaces: value receivers and pointer receivers - COCOgsta's Blog - CSDN

Go Self-Study Series | golang concurrency: Timer - COCOgsta's Blog - CSDN

Go Self-Study Series | golang methods - COCOgsta's Blog - CSDN

Go Self-Study Series | golang concurrency: synchronization with WaitGroup - COCOgsta's Blog - CSDN

Go Self-Study Series | golang constructors - COCOgsta's Blog - CSDN

Go Self-Study Series | golang method receiver types - COCOgsta's Blog - CSDN

Go Self-Study Series | golang interfaces - COCOgsta's Blog - CSDN

Go Self-Study Series | golang: how interfaces relate to types - COCOgsta's Blog - CSDN

Go Self-Study Series | golang structs - COCOgsta's Blog - CSDN

Go Self-Study Series | golang standard library os module: reading files with File - COCOgsta's Blog - CSDN

Go Self-Study Series | golang inheritance - COCOgsta's Blog - CSDN

Go Self-Study Series | golang nested structs - COCOgsta's Blog - CSDN

Go Self-Study Series | golang concurrency: synchronization with a Mutex - COCOgsta's Blog - CSDN

Go Self-Study Series | golang concurrency: channels - COCOgsta's Blog - CSDN

Go Self-Study Series | golang concurrency: atomic operations explained - COCOgsta's Blog - CSDN

Go Self-Study Series | golang concurrency: introducing atomic variables - COCOgsta's Blog - CSDN

Go Self-Study Series | golang concurrency: goroutines - COCOgsta's Blog - CSDN

Go Self-Study Series | golang interface embedding - COCOgsta's Blog - CSDN

Go Self-Study Series | golang package management with go module - COCOgsta's Blog - CSDN

Go Self-Study Series | golang standard library os module: writing files with File - COCOgsta's Blog - CSDN

Go Self-Study Series | golang struct initialization - COCOgsta's Blog - CSDN

Go Self-Study Series | golang: applying the OCP design principle through interfaces - COCOgsta's Blog - CSDN

Go Self-Study Series | golang standard library os package: process operations - COCOgsta's Blog - CSDN

Go Self-Study Series | golang standard library ioutil package - COCOgsta's Blog - CSDN

Go Self-Study Series | golang standard library os module: files and directories - COCOgsta's Blog - CSDN

Golang Tech Stack: Golang articles, tutorials, and videos

Go Self-Study Series | golang struct pointers - COCOgsta's Blog - CSDN

go goroutine leaks (pprof)

Ansible

Finally, someone explains Ansible clearly (worth bookmarking) - 互联网老辛

ansible.cfg configuration explained

Docker

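Docker deployment

Installing Docker and Docker Compose on Linux

Installing Docker on Linux

Troubleshooting problems when installing Docker inside Docker

Common Docker commands

Summary of common docker commands

Completely uninstalling docker

Error during docker pull: Get https://registry-1.docker.io/v2/library/mysql: net/http: TLS handshake timeout

Docker image pulls cannot reach registry-x.docker.io (CentOS 7)

No permissions inside a docker container

How do you disable SELinux on Linux?

Generating docker-compose from docker run

Docker overlay network deployment

Pulling images in the background with docker pull

docker hub

Starting docker inside docker

docker in docker startup command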

Redis

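Don't build a Redis cluster haphazardly: this is the right way

Offline Redis installation on Linux

How to make Redis highly available (master-replica, Sentinel, Cluster) - 雨点的名字 - cnblogs

Offline installation of a Redis cluster

always-show-logo yes

Redis cluster setup and how it works

[ERR] Node 172.168.63.202:7001 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some - 亲爱的不二999 - cnblogs

An introduction to Redis daemonize

Redis download URL

Redis redis.conf configuration comments explained (Part 3) - Cloud+ Community - Tencent Cloud

Redis redis.conf configuration comments explained (Part 1) - Cloud+ Community - Tencent Cloud

Redis redis.conf configuration comments explained (Part 2) - Cloud+ Community - Tencent Cloud

Redis redis.conf configuration comments explained (Part 4) - Cloud+ Community - Tencent Cloud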

Linux

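How to disconnect and exit an SSH session from the terminal

Vulnerability scanning - 灰信网 (software development blog aggregator)

find command options explained

Searching in the vim editor

"mockbuild does not exist" when installing an rpm as a non-root user

Using a SSH password instead of a key is not possible because Host Key checking

Security scan: mDNS service vulnerability on port 5353 - NamiJava's Blog - CSDN

Installing rpm packages with the rpm command on Linux

Using ssh-copy-id with a port other than 22

How To Resolve SSH Weak Key Exchange Algorithms on CentOS7 or RHEL7 - infotechys.com

The Linux cp command

Downloading all dependency rpm packages with yum for offline installation (the ultimate solution) - 叨叨软件测试 - cnblogs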

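RPM zlib download URL

Ops architecture website

Welcome to Jinja2

/usr/local/bin/ss-server -uv -c /etc/shadowsocks-libev/config.json -f /var/run/s

Default OpenSSL install location when installing Ruby

Learning common Linux commands | 菜鸟教程 (RUNOOB)

Renaming files and folders on Linux

Linux command quick guide

ipvsadm

Searching logs for keywords on Linux

Splitting large log files on Linux

CentOS 7 network settings

The rsync command: Linux rsync usage explained (remote data synchronization tool)

Installing a graphical desktop on Linux

[Resolved] yum hangs and stops responding

Upgrading GCC/G++ to a newer version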

ELK

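Deploying ELK with Docker

ELK+kafka+filebeat+Prometheus+Grafana - SegmentFault 思否

Setting an Elasticsearch username and password - huas_xq's Blog - CSDN

Elasticsearch 7.x performance tuning

Elasticsearch rolling updates

Elasticsearch memory optimization - 大数据系统

The Elasticsearch yml configuration file

ES index in Yellow status

Logstash: getting started with the Grok filter

logstash grok: matching multiple patterns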

Mysql

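MySQL tips

Database read/write splitting with ShardingJDBC - 墨天轮

MySQL MHA high-availability solution

JD.com third-round interview: how would you query a table with tens of millions of rows?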

OpenStack

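Problems encountered in OpenStack projects, part 2 (instance migration, Ceph, and extending partitions) - weixin_34104341's Blog - CSDN

Introduction to OpenStack components

OpenStack workflow, from a Baidu expert

Overview of each OpenStack component

Summary of real OpenStack production issues (Part 1)

Offline deployment of OpenStack Train

Setting up OpenStack with Packstack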

K8S

K8S部署

K8S 集群部署

kubeadm 重新 init 和 join-pudn.com

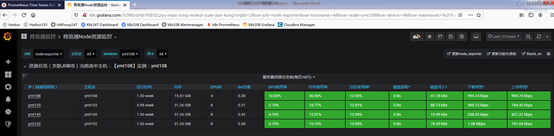

Kubernetes 实战总结 - 阿里云 ECS 自建 K8S 集群 Kubernetes 实战总结 - 自定义 Prometheus

【K8S实战系列-清理篇1】k8s docker 删除没用的资源

Flannel Pod Bug汇总

Pod创建流程代码版本[kubelet篇]

Java

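Jdk deployment

JDK deployment

Java thread pools: using the ThreadPoolExecutor class in detail - bigfan - cnblogs

Database and table sharding across multiple database nodes with ShardingJDBC - 墨天轮

Maven Repository: Search/Browse/Explore

Other

Git at Alibaba: how we manage code branches

"Paused in debugger" when debugging a page with Chrome F12

Trying out IntelliJ IDEA Remote Development - Juejin

Remote debugging in IDEA

PDF to Markdown

Practical resources shared by 强哥

A collection of excellent open-source projects

Building a project docs portal with Vercel and GitHub

How to write a tech blog with GitHub Issues

Fixing the "Blocked mirror for repositories" error with IDEA 2021.3 and Maven 3.8.1

Listing Maven dependencies

[2022-09, continuously updated] Working Google mirrors / Sci-Hub URLs / GitHub mirror URLs

Alibaba Cloud ECS migration

Accessing GitHub from Linux

Installing and starting Nacos with Docker - Tencent Cloud Developer Community

Remote/local multi-user desktop 1.17 (a way to stop the computer fighting you for the keyboard and mouse) - Bilibili

Nginx

Nginx deployment

Nginx installation and deployment

Cookies lost behind an Nginx reverse proxy - longzhoufeng's Blog - CSDN

Generating HTTPS certificates on Linux and configuring HTTPS in Nginx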

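Data Warehouse

Real-time data warehouse

松果出行 x StarRocks: putting a new real-time data warehouse paradigm into practice

Layers of a real-time data warehouse, what each layer handles, and how data flows

Lakehouse e-commerce project

Lakehouse e-commerce project (1): background and architecture

Lakehouse e-commerce project (2): technologies, versions, and base environment preparation

Lakehouse e-commerce project (3): building 12 foundational big-data components from scratch (30,000 characters)

Data warehouse notes

Data warehouse study summary

Common data warehouse platforms and frameworks

Data warehouse study notes

Data warehouse technology selection

尚硅谷 (Atguigu) tutorials

Atguigu study notes

All known Atguigu course materials

Atguigu big-data project 尚品汇 (11: data quality management, V4.0)

Atguigu big-data project 尚品汇 (10: metadata management with Atlas, V4.0)

Atguigu big-data project 尚品汇 (9: permission management with Ranger, V4.0)

Atguigu big-data project 尚品汇 (8: secure environment in practice, V4.0)

Atguigu big-data project 尚品汇 (7: user authentication with Kerberos, V4.1)

Atguigu big-data project 尚品汇 (6: cluster monitoring with Zabbix, V4.1)

Atguigu big-data project 尚品汇 (5: ad hoc queries with Presto and Kylin, V4.0)

Atguigu big-data project 尚品汇 (4: visual reports with Superset, V4.0)

Atguigu big-data project 尚品汇 (3: data warehouse system) V4.2.0

Atguigu big-data project 尚品汇 (2: business data collection platform) V4.1.0

Atguigu big-data project 尚品汇 (1: user behavior collection platform) V4.1.0

Data warehouse governance

Data middle platform: metadata standards

Data middle platform "lessons and pitfalls"

A 20,000-character guide to building a data warehouse, metrics, and data governance system

Why does a data warehouse need layered construction and management? | 人人都是产品经理

The evolution of data governance at 网易数帆 (NetEase Shufan)

Data warehouse technology

The complete big-data ecosystem knowledge map in one article

Alibaba Cloud 升舱: data warehouse upgrade white paper

The most complete enterprise data warehouse build guide, iterated edition (40,000 characters, worth bookmarking)

Implementing data warehouse layers with Hue, DolphinScheduler, and Hive, with a project requirements case study

Data warehouse layered architecture explained

Data warehouse technical details

Introduction to big-data platform components

Overview: global machine learning, AI, and big-data industry technology maps, 2016-2021

Apache DolphinScheduler 3.0.0 officially released!

Data warehouse interview question: describe the data warehouse

Why layer a data warehouse, and what each layer does

Databend v0.8 released: a modern cloud data warehouse built in Rust - OSCHINA - Chinese open-source community

Data middle platform

Data middle platform design

Big-data sync tools compared: FlinkCDC / Canal / Debezium

有数 data development platform documentation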

Shell

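Linux shell command arguments

Shell scripting

One article to teach you 90% of shell scripting

Kibana

Kibana Query Language (KQL)

Kibana: four ways to build tables in Kibana

Kafka

Kafka deployment

Using canal to monitor MySQL, parse the binlog, and send the captured data to Kafka

OpenApi

The OpenAPI specification: an introduction

OpenAPI academic papers

Design and implementation of the Guiyang municipal government open data platform

Introduction to OpenAPI

Open platforms: a survey of operating models and technical architecture

Management

Must a tech department leader be a top technical expert?

An introduction to Huawei's management system and processes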

DevOps

*Ops

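XOps has become a popular term: what is it?

Practical Linux DevOps

Jenkins 2.x Practical Guide (翟志军)

Jenkins 2: The Definitive Guide (Brent Laster, US)

Approaches to high availability for DevOps components

KeepAlived

Fixing "Connection Peer" problems with VIP + Keepalived + LVS

MinIO

MinIO deployment

Setting up a distributed MinIO cluster

MinIO beginner series [16]: source analysis of the putObject multipart upload flow

A first look at the MinIO API, and issues

Deploying MinIO in AWS S3-compatible mode

A very detailed hands-on tutorial for MinIO distributed object storage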

Hadoop

Hadoop deployment

Hadoop cluster deployment

Setting up a Hadoop environment on Windows (fixing "HADOOP_HOME and hadoop.home.dir are unset")

Hadoop cluster setup and simple usage (see the article below)

"ERROR: Cannot set priority of namenode process 2639" when starting the Hadoop NameNode

DataNode process missing from jps output (tested on Hadoop 3.0)

Hadoop error: Operation category READ is not supported in state standby

Spark

Spark deployment

Spark cluster deployment

Analyzing Spark heartbeat timeouts: Cannot receive any reply in 120 seconds

Spark study notes

apache spark - Failed to find data source: parquet, when building with sbt assembly

Spark Thrift Server architecture and internals

InLong

InLong deployment

Apache InLong deployment docs

Installation - Docker deployment - "Apache InLong v1.2 Chinese Documentation" - BookStack

An analysis of the InLong Sort ETL solution based on Apache Flink SQL

Ingesting data into Apache InLong with Apache Pulsar

zookeeper

ZooKeeper deployment

Building a ZooKeeper cluster with Docker

Meituan Tech Team

StarRocks

StarRocks technical white paper (online edition)

JuiceFS

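Storage optimization for AI workloads: 云知声's supercomputing platform on JuiceFS

JuiceFS for warm and cold data storage in Elasticsearch/ClickHouse

JuiceFS format

Metadata backup and recovery | JuiceFS Document Center

JuiceFS metadata engine selection guide

Using Apache Hudi file clustering (Clustering) to solve the too-many-small-files problem

Prometheus

Prometheus monitoring on k8s: a quick walkthrough of the K8S monitoring flow

k8s deployment - deploying Prometheus monitoring with helm3, from zero to done in one article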

k8s deployment - how to round out Prometheus monitoring in k8s

k8s deployment - Prometheus dashboards in k8s: Grafana + alerting

zabbix

Master the Zabbix monitoring system in one article

Stream Collectors

Nvidia

Nvidia API

Installing CUDA and the Nvidia driver

A simple fix for a broken NVIDIA driver: NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver.

Installing CUDA 12.1 on Ubuntu 20

Enabling Nvidia persistence mode

Enabling persistence mode with nvidia-smi

Harbor

Harbor deployment docs

Docker error: it doesn't contain any IP SANs

pandoc

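Other topics

Large models

COS 597G (Fall 2022): Understanding Large Language Models

How to use various LLMs elegantly

ChatGLM3 online search upgrade

When ChatGLM3 can use a search engine

A great OCR tool: converts PDFs and even math formulas

Stable Diffusion animation: animatediff-cli-prompt-travel

Custom virtual digital human generation with ERNIE Bot

Pika negative prompts

Ways to get GPT-4 access

GPT-4 websites

Getting GPT Plus cheaply

Large model use cases

AppAgent: an AutoGPT variant

Machine learning

Maximum likelihood estimation

Trading off bias and variance to minimize mean squared error (MSE)

Bernoulli distribution

Variance formula

Gaussian estimation of the mean

No free lunch theorem

Bayes error

Nonparametric models

Nearest-neighbor regression

Representational capacity

Optimal capacity

Weight decay

Regularization term

Sora

Official Sora prompts

After reading 32 papers, you will roughly know how Sora was built | [经纬低调]

Sora papers

A geometric explanation of Sora's physical paradoxes

Discussion of Sora's tech stack

Vertical RAG deployments

Building a RAG knowledge base with DB-GPT and TeleChat-7B

ChatWithRTX

ChatRTX installation tutorial

ChatWithRTX pitfalls

Using other quantized models with ChatWithRTX

Introduction to ChatWithRTX

RAG resources

NVIDIA: automated customer-service Q&A with large models and RAG

Another large-model technology open-sourced: Youdao's in-house RAG engine QAnything is now available for download

Bookmark this: an open-source roundup of introductory RAG references (surveys, introductions, comparisons, preprocessing, RAG embeddings, and more)

RAG research survey

Solving production problems in modern RAG

Solving production problems in modern RAG systems, II

Modular RAG and RAG Flow: Part Ⅰ

Modular RAG and RAG Flow: Part II

Advanced retriever techniques to enhance your RAGs

Advanced RAG: improving retrieval with Hypothetical Document Embeddings (HyDE)

Improving RAG: choosing the best embedding and reranker models

LangGraph

Enhanced RAG: re-ranking

LightRAG: powering LLM applications with PyTorch

RAG 101: chunking strategies

Model training

GPU resources

[Tutorial] A concise conda installation guide (Miniconda on Windows)

PyTorch and CUDA version compatibility | PyTorch

Resources

李一舟 complete course collection

Miscellaneous resources

Shared Apple IDs for different regional stores

Data center networking technology overview

Huawei large-model training study notes

Baidu AIGC engineer certification exam answers (exchangeable for an MIIT certificate)

Baidu AI Cloud Generative AI Certified Engineer: exam and certificate lookup guide

Understanding Megatron-LM in depth (1): fundamentals

QAnything

An AI Q&A knowledge base integrated with QAnything: a self-hostable enterprise wiki knowledge base

Fixing a failed "wsl --update" with Error code: Wsl/UpdatePackage/0x80240438

Fixing Docker Desktop spinning forever while starting the docker engine

Docker still fails with "docker desktop windows hypervisor is not present" on Win10 with Hyper-V enabled

WSL virtual disk too large: moving the ext4 image, and creating symbolic and hard links on Windows

Switching the default Linux distribution in WSL2

Starting the sshd service automatically in Windows WSL

Configuring WSL in newer Docker Desktop versions (using the Windows subsystem)

Enabling SSH in WSL

Building an intelligent customer-service system on Windows with NetEase's open-source QAnything

Chips

Overview of in-house chips from major Chinese internet companies

Supercomputing platforms: compute providers

Expanding Linux disks

Online disk expansion on Linux with growpart (non-LVM)

CentOS 7 disk expansion reports "no tools available to resize disk with 'gpt'" - o夜雨随风o - cnblogs

(Side note) After configuring the APOC plugin in Neo4j, checking the version fails with: Unknown function 'apoc.version' "EXPLAIN RETURN apoc.version()"

KubeVirt

Configuring, starting, restarting, and connecting to a VNC server - 王约翰 - cnblogs

Virtual machine bug fixes

How to upload image files to KubeVirt via CDI

Run VMs on K8S too! KubeVirt introduction and setup (deployment) | Cloud Solutions

KubeVirt 04: containerized data import - 小菜园

Python

Installing flash_attn

Step-by-step: installing PyTorch and CUDA on Linux

AI

Running PyTorch model-training experiments on domestic accelerator cards in the 启智 (OpenI) community

Scaling law

Free GPT-3.5 API

AI Engineer Roadmap & Resources 🤖

Model leaderboards

edk2

Deleting Evicted pods in K8S

Investigation notes: abrt-ccpp crashing a server

Kernel download URLs for all versions

2025 work

671B DeepSeek

Cursor

Text-to-image model leaderboard

General-purpose agents vs. vertical agents

Kubevirt

How vfio-pci and igb_uio map hardware resources to DPDK

KubeVirt Chinese community

Connecting to VNCServer

[Translation] A deep dive into the Kubernetes MutatingAdmissionWebhook - Tencent Cloud Developer Community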

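Understanding Kubernetes Admission Webhooks in depth - 阳明's blog

Installing mbedtls and mbedtls-devel on CentOS 7

ssl

Caddy: a tool that simplifies SSL certificate management

Renewing SSL certificates

护网 (cyber-defense drill) requirements, MindIE

ESP-IDF installation guide

Commercializing AI agents as a programmer: from tech geek to a side business earning 20,000 a month

macOS Bluetooth keeps disconnecting

MindStudio Insight visual tuning tool, trial version - MindStudio - Ascend Forum

Notes on using the serving auto-tuning tool - MindStudio - Ascend Forum

MindStudio Insight use case with 1,000-card data - MindStudio - Ascend Forum

[Major update] Introducing the MindStudio Insight visual tuning tool (renamed from Ascend Insight)! - MindStudio - Ascend Forum

[MindStudio Bootcamp, Cohort 1] Advanced class study notes, part 1 - MindStudio - Ascend Forum

[MindStudio Bootcamp, Cohort 1] Advanced class study notes, part 2 - MindStudio - Ascend Forum

Deploying FunASR on Atlas 800I A2 - Ascend main board - Ascend Forum

Adapting PaddleOCR to the Ascend 300I Duo inference card

Out-of-the-box deployment of Qwen2.5-VL-32B on Ascend MindIE for smarter multimodal understanding

Error starting Qwen3-30B-A3B on MindIE 2.1.rc1 - MindIE - Ascend Forum

Installing the NVIDIA driver in WSL

MindIE quantization

npu-310b notes: what to do if npu-smi info is unavailable after startup

Cambricon (寒武纪) vLLM

MindIE on the 310P1

Adjusting tokens in MindIE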

Kubernetes in Practice - Self-Built K8S Cluster on Alibaba Cloud ECS / Kubernetes in Practice - Custom Prometheus

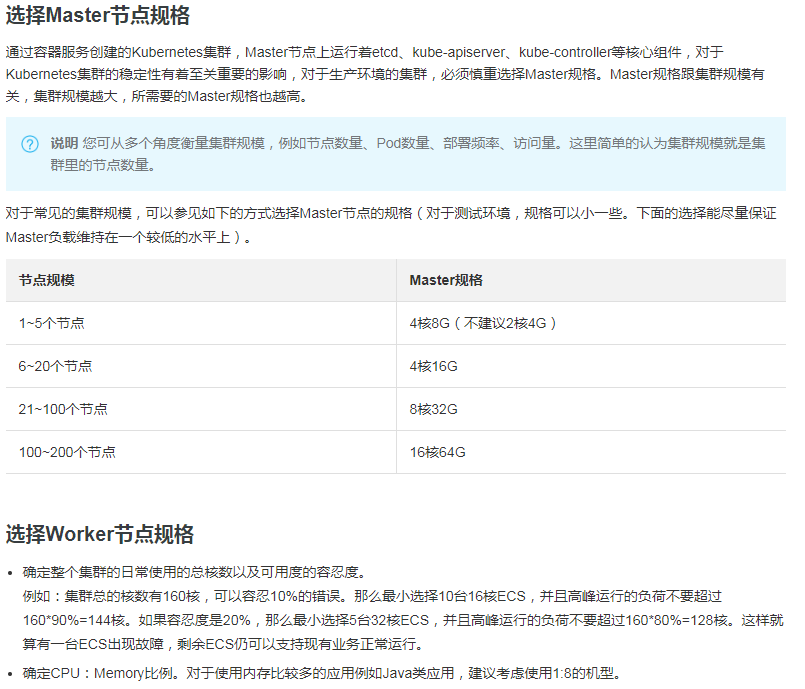

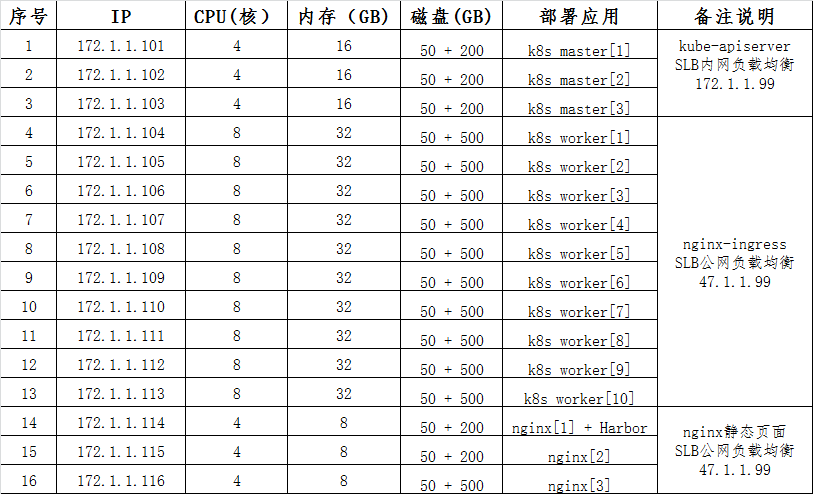

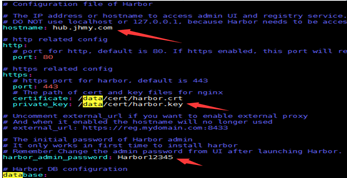

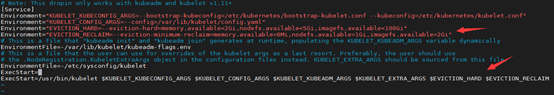

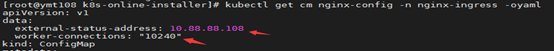

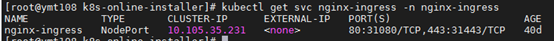

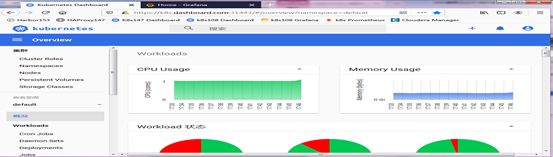

> This article was converted by [简悦 SimpRead](http://ksria.com/simpread/). Original: [www.cnblogs.com](https://www.cnblogs.com/leozhanggg/p/13522155.html)

I. Overview
=====

#### For details, see the Alibaba Cloud documentation: [https://help.aliyun.com/document_detail/98886.html?spm=a2c4g.11186623.6.1078.323b1c9bpVKOry](https://help.aliyun.com/document_detail/98886.html?spm=a2c4g.11186623.6.1078.323b1c9bpVKOry)

### Resource allocation for my project (excluding databases and middleware):

II. Deploy the image registry
========

### 1) Install docker-compose, then install docker as described below.

```
$ sudo curl -L "https://github.com/docker/compose/releases/download/1.26.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
$ sudo chmod +x /usr/local/bin/docker-compose
$ sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose
$ docker-compose --version
docker-compose version 1.26.2, build 1110ad01
```

### 2) Create a certificate for the registry domain.

```
mkdir -p /data/cert && chmod -R 777 /data/cert && cd /data/cert
openssl req -x509 -sha256 -nodes -days 3650 -newkey rsa:2048 -keyout harbor.key -out harbor.crt -subj "/CN=hub.jhmy.com"
```

### 3) Download the Harbor offline installer and edit harbor.yml: set the hostname, the certificate paths, and the registry password.

### 4) Run install.sh to deploy. When it finishes, open https://hostip.

```
Deployment flow: check environment -> load images -> prepare environment -> prepare configuration -> start
```

III. System initialization
==========

### 1) Set the hostname and name resolution

```
hostnamectl set-hostname k8s101
cat >> /etc/hosts <<EOF
172.1.1.114 hub.jhmy.com
172.1.1.101 k8s101
172.1.1.102 k8s102
172.1.1.103 k8s103
172.1.1.104 k8s104
……
172.1.1.99 k8sapi
EOF
```

### 2) Set up passwordless SSH between nodes

```
ssh-keygen
ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8s-node1
```

### 3) Install dependencies and common tools, and sync the time and time zone

```
yum -y install vim curl wget unzip ntpdate net-tools ipvsadm ipset sysstat conntrack libseccomp
ntpdate ntp1.aliyun.com && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
```

### 4) Disable swap, SELinux, and firewalld

```
swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
systemctl stop firewalld && systemctl disable firewalld
```
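The `sed` expression in step 4 comments out swap entries in /etc/fstab so that swap stays off after a reboot. The sketch below runs the same expression against a scratch file before you touch the real one. It is an illustration, not part of the original guide. The function name `comment_out_swap` and the two sample fstab entries are hypothetical, and GNU `sed` (as shipped on CentOS) is assumed for `-i`.

```shell
#!/usr/bin/env bash
# Sketch: try the step-4 swap expression on a scratch file instead of /etc/fstab.
# comment_out_swap and the sample entries are hypothetical; GNU sed is assumed.
set -euo pipefail

comment_out_swap() {
  # Same expression as in step 4: prefix '#' to every line containing " swap "
  sed -i '/ swap / s/^\(.*\)$/#\1/g' "$1"
}

sample=$(mktemp)
trap 'rm -f "$sample"' EXIT   # remove the scratch file when the script exits

cat > "$sample" <<'EOF'
/dev/mapper/centos-root / xfs defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF

comment_out_swap "$sample"
cat "$sample"
```

The pattern needs a literal space on both sides of `swap`, so a tab-separated fstab entry would be left unchanged. After running the real command, check /etc/fstab and confirm that `free -h` reports no swap.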
### 5) Tune kernel parameters

```
cat > /etc/sysctl.d/kubernetes.conf <<EOF
net.bridge.bridge-nf-call-iptables=1
net.bridge.bridge-nf-call-ip6tables=1
net.ipv6.conf.all.disable_ipv6=1
net.ipv4.ip_forward=1
net.ipv4.tcp_tw_recycle=0
vm.swappiness=0
fs.file-max=2000000
fs.nr_open=2000000
fs.inotify.max_user_instances=512
fs.inotify.max_user_watches=1280000
net.netfilter.nf_conntrack_max=524288
EOF
# -a loads every listed module; without it, modprobe passes the extra names to the first module as parameters
modprobe -a br_netfilter ip_conntrack && sysctl -p /etc/sysctl.d/kubernetes.conf
```

### 6) Load the IPVS kernel modules

```
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules
sh /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_
```

### 7) Install the NFS file sharing service

```
# nfs-common is the Debian/Ubuntu package name; on CentOS, nfs-utils provides NFS
yum -y install nfs-utils rpcbind
systemctl start rpcbind && systemctl enable rpcbind
systemctl start nfs && systemctl enable nfs
```

IV. Deploy the highly available cluster
============

### 1) Install docker

```
# Configure the package repository and install docker and its components
yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum install -y docker-ce-19.03.5 docker-ce-cli-19.03.5
# Configure the registry mirror, the private registry address, and logging
mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://jc3y13r3.mirror.aliyuncs.com"],
  "insecure-registries":["hub.jhmy.com"],
  "data-root": "/data/docker",
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  }
}
EOF
# Restart docker and enable it at boot
mkdir -p /etc/systemd/system/docker.service.d
systemctl daemon-reload && systemctl restart docker && systemctl enable docker
```

### 2) Install kubernetes

```
# Configure the kubernetes package repository
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
# Install kubeadm, kubelet, and kubectl
yum -y install kubeadm-1.17.5 kubelet-1.17.5 kubectl-1.17.5 --setopt=obsoletes=0
systemctl enable kubelet.service
```

### 3) Initialize the first control-plane node

Pick any master node and edit its /etc/hosts so that k8sapi resolves to this node's own address. During system initialization, every node was pointed at the SLB address.

We plan to load-balance kube-apiserver with Alibaba Cloud SLB. However, the cluster is not running yet, so nothing is listening on the k8sapi port and the SLB port cannot be reached. Cluster initialization would therefore fail. For now, we use the current node's address as the load-balancer address, so the node balances to itself and initialization can complete.

**Note:** Because this is a production environment, we change several defaults where possible: the token, the apiserver port, the etcd data path, the pod IP range, and so on.

```
# kubeadm config print init-defaults > kubeadm-config.yaml
# vim kubeadm-config.yaml
```

```
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: token0.123456789kubeadm
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.1.1.101
  bindPort: 6333
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s101
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "k8sapi:6333"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /data/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.17.5
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.233.0.0/16
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs
```

```
# kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log
```

After the first master is initialized, open the Alibaba Cloud load balancer console and add an internal SLB listener for kube-apiserver. Only layer-4 TCP works here.

For now, add only the current master as a backend, and add the other masters after they have joined. If you added them now, the SLB health checks would fail and the other nodes would be unable to join the cluster.

### 4) Join the remaining control-plane and worker nodes

```
# Following the init log, run kubeadm join to add the other control-plane nodes.
kubeadm join k8sapi:6333 --token token0.123456789kubeadm \
    --discovery-token-ca-cert-hash sha256:56d53268517... \
    --control-plane --certificate-key c4d1525b6cce4....

# As the log instructs, run the following on every control-plane node to give the user kubectl access.
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Following the init log, run kubeadm join to add the worker nodes.
kubeadm join k8sapi:6333 --token token0.123456789kubeadm \
    --discovery-token-ca-cert-hash sha256:260796226d…………
```

```
Note: the token is valid for 24 hours. Once it expires, regenerate the join command on a master node with:
```

```
kubeadm token create --print-join-command
```

On each newly joined master, change the apiserver port, then add the node to the Alibaba Cloud SLB apiserver backend.

```
# Change the kube-apiserver listening port
sed -i 's/6443/6333/g' /etc/kubernetes/manifests/kube-apiserver.yaml
# Restart the kube-apiserver container
docker restart `docker ps | grep k8s_kube-apiserver | awk '{print $1}'`
# Check the kube-apiserver listening port
ss -anp | grep "apiserver" | grep 'LISTEN'
```

**Note:** If you forget this step, later deployments may fail. kube-prometheus is one example:

```
[root@ymt-130 manifests]# kubectl -n monitoring logs pod/prometheus-operator-5bd99d6457-8dv29
ts=2020-08-27T07:00:51.38650537Z caller=main.go:199 msg="Starting Prometheus Operator version '0.34.0'."
ts=2020-08-27T07:00:51.38962086Z caller=main.go:96 msg="Staring insecure server on :8080"
ts=2020-08-27T07:00:51.39038717Z caller=main.go:315 msg="Unhandled error received. Exiting..." err="communicating with server failed: Get https://10.96.0.1:443/version?timeout=32s: dial tcp 10.96.0.1:443: connect: connection refused"
```

### 5) Deploy the network plugin and check cluster health

```
# Apply the prepared manifest
kubectl apply -f kube-flannel.yaml
# Check the cluster deployment
kubectl get cs && kubectl get nodes && kubectl get pod --all-namespaces
# Check etcd cluster health (requires uploading the etcdctl binary)
[root@k8s101 ~]# etcdctl --cert /etc/kubernetes/pki/etcd/peer.crt --key /etc/kubernetes/pki/etcd/peer.key --endpoints https://172.1.1.101:2379,https://172.1.1.102:2379,https://172.1.1.103:2379 --insecure-skip-tls-verify endpoint health
https://172.1.1.101:2379 is healthy: successfully committed proposal: took = 12.396169ms
https://172.1.1.102:2379 is healthy: successfully committed proposal: took = 12.718211ms
https://172.1.1.103:2379 is healthy: successfully committed proposal: took = 13.174164ms
```

### 6) Tune the kubelet eviction policy

```
# Change the kubelet startup flags on worker nodes to adjust the Pod eviction policy
```

```
vim /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf
Environment="EVICTION_HARD=--eviction-hard=memory.available<2Gi,nodefs.available<5Gi,imagefs.available<100Gi"
Environment="EVICTION_RECLAIM=--eviction-minimum-reclaim=memory.available=0Mi,nodefs.available=1Gi,imagefs.available=2Gi"
# systemd only passes these to kubelet if $EVICTION_HARD and $EVICTION_RECLAIM are also appended to the ExecStart line
```

```
# Restart kubelet and check its startup flags
```

```
[root@k8s104 ~]# systemctl daemon-reload && systemctl restart kubelet
[root@k8s104 ~]# ps -ef | grep kubelet | grep -v grep
[root@k8s104 ~]# ps -ef | grep "/usr/bin/kubelet" | grep -v grep
root 24941 1 2 Aug27 ? 03:00:12 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --cgroup-driver=systemd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.1 --eviction-hard=memory.available<2Gi,nodefs.available<5Gi,imagefs.available<100Gi --eviction-minimum-reclaim=memory.available=0Mi,nodefs.available=1Gi,imagefs.available=2Gi
```

**More information:** [How the kubelet handles resource shortages](https://www.kubernetes.org.cn/1732.html)

V. Deploy functional components
===========

### 1) Deploy the layer-7 Ingress router

```
# Deploy the Ingress controller and the forwarding rules for the base components
```

```
kubectl apply -f nginx-ingress
```

```
# Edit nginx-config to set the load-balancer address and the maximum number of connections
```

```
kubectl edit cm nginx-config -n nginx-ingress
```

```
# Optionally adjust the ports Ingress exposes externally, then configure the Alibaba Cloud public SLB for the workloads (all worker nodes)
```

**More details:** [Nginx global configuration](https://docs.nginx.com/nginx-ingress-controller/configuration/global-configuration/configmap-resource/)

### 2) Deploy the Dashboard web UI

```
# Apply the prepared manifest
```

```
kubectl apply -f kube-dashboard.yml
```

```
# Wait for the deployment to finish
```

```
kubectl get pod -n kubernetes-dashboard
```

```
# Log in to the dashboard via its domain name; get the token with the command below (the domain must resolve on your local machine)
```

```
kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep dashboard-admin | awk '{print $1}')
```

```
https://k8s.dashboard.com:IngressPort
```

### 3) Deploy Filebeat for log collection

```
# Adjust the matched log paths, the logstash address, and the host directory
```

```
# Then deploy it
```

```
kubectl apply -f others/kube-filebeat.yml
```

```
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: kube-system
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.inputs:
    - type: log
      paths:
        - /home/ymt/logs/appdatamonitor/warn.log
    output.logstash:
      hosts: ["10.88.88.169:5044"]
---
# filebeat.config:
#   inputs:
#     # Mounted `filebeat-inputs` configmap:
#     path: ${path.config}/inputs.d/*.yml
#     # Reload inputs configs as they change:
#     reload.enabled: false
#   modules:
#     path: ${path.config}/modules.d/*.yml
#     # Reload module configs as they change:
#     reload.enabled: false
# To enable hints based autodiscover, remove `filebeat.config.inputs` configuration and uncomment this:
#filebeat.autodiscover:
#  providers:
#    - type: kubernetes
#      hints.enabled: true
# processors:
#   - add_cloud_metadata:
# cloud.id: ${ELASTIC_CLOUD_ID}
# cloud.auth: ${ELASTIC_CLOUD_AUTH}
# output.elasticsearch:
#   hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
#   username: ${ELASTICSEARCH_USERNAME}
#   password: ${ELASTICSEARCH_PASSWORD}
---
# apiVersion: v1
# kind: ConfigMap
# metadata:
#   name: filebeat-inputs
#   namespace: kube-system
#   labels:
#     k8s-app: filebeat
# data:
#   kubernetes.yml: |-
#     - type: docker
#       containers.ids:
#       - "*"
#       processors:
#         - add_kubernetes_metadata:
#             in_cluster: true
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        # image: docker.elastic.co/beats/filebeat:6.7.2
        image: registry.cn-shanghai.aliyuncs.com/leozhanggg/elastic/filebeat:6.7.1
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        # env:
        # - name: ELASTICSEARCH_HOST
        #   value: elasticsearch
        # - name: ELASTICSEARCH_PORT
        #   value: "9200"
        # - name: ELASTICSEARCH_USERNAME
        #   value: elastic
        # - name: ELASTICSEARCH_PASSWORD
        #   value: changeme
        # - name: ELASTIC_CLOUD_ID
        #   value:
        # - name: ELASTIC_CLOUD_AUTH
        #   value:
        securityContext:
          runAsUser: 0
          # If using Red Hat OpenShift uncomment this:
          #privileged: true
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        # - name: inputs
        #   mountPath: /usr/share/filebeat/inputs.d
        #   readOnly: true
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: ymtlogs
          mountPath: /home/ymt/logs
          readOnly: true
        # - name: varlibdockercontainers
        #   mountPath: /var/lib/docker/containers
        #   readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: ymtlogs
        hostPath:
          path: /home/ymt/logs
      # - name: varlibdockercontainers
      #   hostPath:
      #     path: /var/lib/docker/containers
      # - name: inputs
      #   configMap:
      #     defaultMode: 0600
      #     name: filebeat-inputs
      # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: kube-system
  labels:
    k8s-app: filebeat
---
```

kube-filebeat.yaml

```
Note: our logstash and ES are both deployed outside the cluster, so only filebeat runs in the k8s cluster. It collects logs and ships them to the external logstash.
```

### 4) Deploy the Prometheus monitoring platform

```
# First deploy the default components
```

```
cd kube-prometheus-0.3.0/manifests
kubectl create -f setup && sleep 5 && kubectl create -f .
```

```
# Wait for the deployment to finish
```

```
kubectl get pod -n monitoring
```

```
# Then edit the custom monitoring configuration and run the upgrade script
```

```
cd custom && sh upgrade.sh
```

* Alerting configuration: alertmanager.yaml
* Default alerting rules: prometheus-rules.yaml
* Additional alerting rules: prometheus-additional-rules.yaml
* Additional scrape targets: prometheus-additional.yaml (adjust the targets and their addresses)
* Prometheus configuration: prometheus-prometheus.yaml (adjust the replica count and resource limits)

```
# Log in to the monitoring UIs via their domain names (the domains must resolve on your local machine)
```

```
http://k8s.grafana.com:IngressPort # the default username and password are both admin
```

```
http://k8s.prometheus.com:IngressPort
```

```
http://k8s.alertmanager.com:IngressPort
```

```
# Click the add button -> Import -> Upload .json file to import the dashboards:
```

* k8s-model.json
* node-model.json

### For details, see: [Kubernetes in Practice - Custom Prometheus](https://www.cnblogs.com/leozhanggg/p/13502983.html)

VI. Other notes
===========

### 1) Using kubectl

```
# Enable command auto-completion
yum install -y bash-completion
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc
```

**Official docs:** [Kubernetes kubectl command reference](http://docs.kubernetes.org.cn/683.html)

**Blog post:** [A roundup of common kubernetes commands](https://blog.51cto.com/8355320/2474063)

### 2) Extend certificate validity

```
# Check certificate expiration
kubeadm alpha certs check-expiration
# Regenerate all certificates
kubeadm alpha certs renew all
# Restart the control-plane component containers on every master node
docker ps | \
    grep -E 'k8s_kube-apiserver|k8s_kube-controller-manager|k8s_kube-scheduler|k8s_etcd_etcd' | \
    awk -F ' ' '{print $1}' | xargs docker restart
```

### 3) Remove a node from the k8s cluster

```
# Mark the node as unschedulable
kubectl cordon k8s-node1
# Gracefully evict the pods running on the node to other nodes (DaemonSet pods such as flannel would otherwise block the drain)
kubectl drain k8s-node1 --delete-local-data --force --ignore-daemonsets
# Delete the node from the cluster
kubectl delete node k8s-node1
# Reset the configuration on the removed node
kubeadm reset
# Manually delete the leftover files listed in the reset output
rm -rf /etc/cni/net.d
ipvsadm --clear
rm -rf /root/.kube/
# Stop the kubelet service
systemctl stop kubelet
# List the installed k8s packages
yum list installed | grep 'kube'
# Remove the k8s packages
yum remove kubeadm.x86_64 kubectl.x86_64 cri-tools.x86_64 kubernetes-cni.x86_64 kubelet.x86_64
```

### 4) Completely clean up a node's network

```
# Reset the node
kubeadm reset -f
# Remove the configuration
rm -rf $HOME/.kube/config /etc/cni/net.d &&
ipvsadm --clear
# Stop kubelet and docker
systemctl stop kubelet && systemctl stop docker
# Delete the network configuration and routing entries
rm -rf /var/lib/cni/
ip link delete cni0
ip link delete flannel.1
ip link delete dummy0
ip link delete kube-ipvs0
# Restart docker, kubelet, and the network
systemctl restart docker && systemctl restart kubelet && systemctl restart network
```

```
# Switching network plugins can sometimes leave a wrong podCIDR, which can be patched manually
# (the API server only accepts this while the node's podCIDR is still empty; it rejects changes to one already set)
kubectl describe node k8s112 | grep PodCIDR
kubectl patch node k8s112 -p '{"spec":{"podCIDR":"10.233.0.0/16"}}'
```

### 5) Deploy applications to master nodes

```
# Add a toleration for the NoSchedule taint and a node affinity for master nodes
tolerations:
- key: node-role.kubernetes.io/master
  effect: NoSchedule
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        - key: node-role.kubernetes.io/master
          operator: Exists
```

```
Note: after deploying the k8s dashboard, we sometimes found it very sluggish when opened through a master node's address, but smooth when opened through the node it ran on.
Adding this configuration to the dashboard schedules it onto a master node, so opening it through the master address is smooth as well.
```

### 6) Rename a k8s node

```
# A self-built K8S cluster on Alibaba Cloud may fail to connect to the apiserver. This is usually caused by slow DNS lookups when K8S resolves the node name; renaming the node fixes it.
hostname ymt-140
vim /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf
Environment="KUBELET_HOSTNAME=--hostname-override=ymt-140"
# ...and append $KUBELET_HOSTNAME to the ExecStart line in the same file
systemctl daemon-reload && systemctl restart kubelet && ps -ef | grep /usr/bin/kubelet | grep -v grep
journalctl -xe -u kubelet
```

### 7) Deployment log

```
[root@k8s101 ~]# kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log
W0819 09:24:09.326568 28880 validation.go:28] Cannot validate kube-proxy config - no validator is available
W0819 09:24:09.326626 28880 validation.go:28] Cannot validate kubelet config - no validator is available
[init] Using Kubernetes version: v1.17.5
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s101 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local k8sapi] and IPs [10.96.0.1 172.1.1.101]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s101 localhost] and IPs [172.1.1.101 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s101 localhost] and IPs [172.1.1.101 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
W0819 09:24:14.028737 28880 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[control-plane] Creating static Pod manifest for "kube-scheduler"
W0819 09:24:14.029728 28880 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 16.502551 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key: 8782750a5ffd83f0fdbe635eced5e6b1fc4acd73a2a13721664494170a154a01
[mark-control-plane] Marking the node k8s101 as control-plane by adding the label "node-role.kubernetes.io/master=''"
[mark-control-plane] Marking the node k8s101 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: zwx051.085210868chiscdc
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join k8sapi:6333 --token zwx051.085210868chiscdc \
    --discovery-token-ca-cert-hash sha256:de4d9a37423fecd5313a76d99ad60324cdb0ca6a38254de549394afa658c98b2 \
    --control-plane --certificate-key 8782750a5ffd83f0fdbe635eced5e6b1fc4acd73a2a13721664494170a154a01

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8sapi:6333 --token zwx051.085210868chiscdc \
    --discovery-token-ca-cert-hash sha256:de4d9a37423fecd5313a76d99ad60324cdb0ca6a38254de549394afa658c98b2
[root@k8s102 ~]# kubeadm join k8sapi:6333 --token zwx051.085210868chiscdc \
> --discovery-token-ca-cert-hash sha256:de4d9a37423fecd5313a76d99ad60324cdb0ca6a38254de549394afa658c98b2 \
> --control-plane --certificate-key 8782750a5ffd83f0fdbe635eced5e6b1fc4acd73a2a13721664494170a154a01
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[preflight] Running pre-flight checks before initializing the new control plane instance
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[download-certs] Downloading the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s101 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local k8sapi] and IPs [10.96.0.1 172.1.1.102]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s101 localhost] and IPs [172.1.1.102 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s101 localhost] and IPs [172.1.1.102 127.0.0.1 ::1]
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"
[certs] Using the existing "sa" key
[kubeconfig] Generating kubeconfig files
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
W0819 10:31:17.604671 4058 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
W0819 10:31:17.612645 4058 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[control-plane] Creating static Pod manifest for "kube-scheduler"
W0819 10:31:17.613524 4058 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[check-etcd] Checking that the etcd cluster is healthy
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
[etcd] Announced new etcd member joining to the existing etcd cluster
[etcd] Creating static Pod manifest for "etcd"
[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s
{"level":"warn","ts":"2020-08-19T10:31:31.039+0800","caller":"clientv3/retry_interceptor.go:61","msg":"retrying of unary invoker failed","target":"passthrough:///https://172.1.1.102:2379","attempt":0,"error":"rpc error: code = DeadlineExceeded desc = context deadline exceeded"}
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[mark-control-plane] Marking the node k8s101 as control-plane by adding the label "node-role.kubernetes.io/master=''"
[mark-control-plane] Marking the node k8s101 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.
* The Kubelet was informed of the new secure connection details.
* Control plane (master) label and taint were applied to the new node.
* The Kubernetes control plane instances scaled up.
* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.
```

Author: [Leozhanggg](https://www.cnblogs.com/leozhanggg/)

Source: [https://www.cnblogs.com/leozhanggg/p/13522155.html](https://www.cnblogs.com/leozhanggg/p/13522155.html)

The copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be kept and a link to the original article must be given in a prominent position on the page; otherwise the right to pursue legal liability is reserved.
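Before running the `kubectl patch` from the podCIDR tip above, it is worth confirming that the new range does not collide with the Service network or the node network. The Go sketch below does that with `net/netip` (Go 1.18+). The ranges it checks are assumptions. `10.96.0.0/12` is kubeadm's default Service CIDR and is consistent with the `10.96.0.1` apiserver IP in the log. `172.1.1.0/24` is only inferred from the node IPs. `conflicts` and `reservedRange` are hypothetical names.

```go
package main

import (
	"fmt"
	"net/netip"
)

// reservedRange is a named CIDR that the pod network should not overlap.
type reservedRange struct {
	Name string
	CIDR string
}

// conflicts returns the names of the reserved ranges that overlap podCIDR.
func conflicts(podCIDR string, reserved []reservedRange) ([]string, error) {
	pod, err := netip.ParsePrefix(podCIDR)
	if err != nil {
		return nil, fmt.Errorf("invalid pod CIDR %q: %w", podCIDR, err)
	}
	var hits []string
	for _, r := range reserved {
		p, err := netip.ParsePrefix(r.CIDR)
		if err != nil {
			return nil, fmt.Errorf("invalid %s CIDR %q: %w", r.Name, r.CIDR, err)
		}
		if pod.Overlaps(p) {
			hits = append(hits, r.Name)
		}
	}
	return hits, nil
}

func main() {
	// Assumed ranges: kubeadm's default Service CIDR and the node subnet implied by the log.
	reserved := []reservedRange{
		{Name: "service", CIDR: "10.96.0.0/12"},
		{Name: "nodes", CIDR: "172.1.1.0/24"},
	}
	for _, candidate := range []string{"10.233.0.0/16", "10.100.0.0/16"} {
		hits, err := conflicts(candidate, reserved)
		if err != nil {
			fmt.Println(err)
			continue
		}
		if len(hits) == 0 {
			fmt.Printf("%s: no overlap\n", candidate)
		} else {
			fmt.Printf("%s: overlaps %v\n", candidate, hits)
		}
	}
}
```

With these assumed ranges, `10.233.0.0/16` (the value used in the tip) is clear, while `10.100.0.0/16` falls inside `10.96.0.0/12` and would be reported as overlapping the Service network.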
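The toleration and node affinity from section 5 work together. The toleration lets the pod past the `node-role.kubernetes.io/master:NoSchedule` taint that kubeadm puts on the master (see the `[mark-control-plane]` lines in the log). The `Exists` expression then restricts the pod to nodes that carry the master label. The Go sketch below models that decision with simplified stand-in types rather than the real `k8s.io/api` structs. It follows the documented toleration matching rules. However, `Taint`, `Toleration`, `tolerates` and `fits` are hypothetical, and the sketch ignores everything else the scheduler checks (resources, other affinity terms and so on).

```go
package main

import "fmt"

// Taint is a simplified stand-in for a node taint (key, value, effect).
type Taint struct {
	Key, Value, Effect string
}

// Toleration is a simplified stand-in for a pod toleration.
type Toleration struct {
	Key, Operator, Value, Effect string
}

// tolerates applies the toleration matching rules: an empty Effect matches every
// effect, an empty Key matches every key, "Exists" ignores the value, and
// "Equal" (the default when Operator is empty) requires the values to be equal.
func tolerates(t Toleration, taint Taint) bool {
	if t.Effect != "" && t.Effect != taint.Effect {
		return false
	}
	if t.Key != "" && t.Key != taint.Key {
		return false
	}
	switch t.Operator {
	case "", "Equal":
		return t.Value == taint.Value
	case "Exists":
		return true
	default:
		return false
	}
}

// fits reports whether the pod may land on the node: every NoSchedule or
// NoExecute taint must be tolerated (PreferNoSchedule is only a soft preference
// and is skipped here), and the required label key must exist on the node,
// which is what "operator: Exists" checks.
func fits(tols []Toleration, taints []Taint, labels map[string]string, requiredKey string) bool {
	for _, taint := range taints {
		if taint.Effect != "NoSchedule" && taint.Effect != "NoExecute" {
			continue
		}
		tolerated := false
		for _, t := range tols {
			if tolerates(t, taint) {
				tolerated = true
				break
			}
		}
		if !tolerated {
			return false
		}
	}
	_, ok := labels[requiredKey]
	return ok
}

func main() {
	const master = "node-role.kubernetes.io/master"
	// Taint and label that kubeadm applied to k8s101 according to the log.
	masterTaints := []Taint{{Key: master, Effect: "NoSchedule"}}
	masterLabels := map[string]string{master: ""}
	// The toleration from the YAML: key + effect, operator defaults to Equal, value "".
	tols := []Toleration{{Key: master, Effect: "NoSchedule"}}

	fmt.Println("with toleration, master:", fits(tols, masterTaints, masterLabels, master))
	fmt.Println("without toleration, master:", fits(nil, masterTaints, masterLabels, master))
	fmt.Println("with toleration, worker:", fits(tols, nil, map[string]string{}, master))
}
```

Only the first case is schedulable. Without the toleration the taint blocks the master, and without the label the required affinity rules out ordinary worker nodes. This is why the dashboard tip needs both parts.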
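In section 6, the name passed to `--hostname-override` becomes the Node object's name. It therefore has to satisfy the RFC 1123 subdomain rule that Kubernetes applies to Node names: lowercase letters, digits, `-` and `.`, starting and ending with an alphanumeric character, and at most 253 characters. Here is a small Go check. `validNodeName` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"regexp"
)

// dns1123Subdomain is the RFC 1123 subdomain pattern used for Node object names:
// dot-separated labels of lowercase alphanumerics and '-', each label starting
// and ending with an alphanumeric character.
var dns1123Subdomain = regexp.MustCompile(`^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$`)

// validNodeName reports whether name is usable as a node name, e.g. as the
// value given to --hostname-override.
func validNodeName(name string) bool {
	return len(name) <= 253 && dns1123Subdomain.MatchString(name)
}

func main() {
	for _, n := range []string{"ymt-140", "k8s101", "node.example.com", "ymt_140", "-ymt140"} {
		fmt.Printf("%-17s %v\n", n, validNodeName(n))
	}
}
```

`ymt-140` from the tip passes. Names containing underscores or starting with a hyphen are rejected.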
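The join commands in the section 7 log carry a bootstrap token (`zwx051.085210868chiscdc`) in the `<token-id>.<token-secret>` format. The ID has 6 characters and the secret has 16, both restricted to `[a-z0-9]`. Below is a Go sketch that validates and splits such a token. The helper `splitToken` is hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
)

// bootstrapToken matches "<token-id>.<token-secret>": a 6-character ID and a
// 16-character secret, both made of lowercase letters and digits.
var bootstrapToken = regexp.MustCompile(`^([a-z0-9]{6})\.([a-z0-9]{16})$`)

// splitToken returns the token ID and secret, or an error if tok is malformed.
func splitToken(tok string) (id, secret string, err error) {
	m := bootstrapToken.FindStringSubmatch(tok)
	if m == nil {
		return "", "", fmt.Errorf("%q does not match <6 chars>.<16 chars> ([a-z0-9])", tok)
	}
	return m[1], m[2], nil
}

func main() {
	id, secret, err := splitToken("zwx051.085210868chiscdc") // token from the log
	if err != nil {
		fmt.Println(err)
		return
	}
	// kubeadm stores the token as the Secret kube-system/bootstrap-token-<id>.
	fmt.Printf("id=%s secret=%d chars secret object=bootstrap-token-%s\n", id, len(secret), id)
}
```

Tokens created by `kubeadm init` expire after 24 hours by default, so a node joining later needs a new one. `kubeadm token create --print-join-command` prints a fresh worker join command. The uploaded certificates behind `--certificate-key` expire separately, after two hours, as the log itself states.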

yg9538

12 August 2022, 23:09
